...

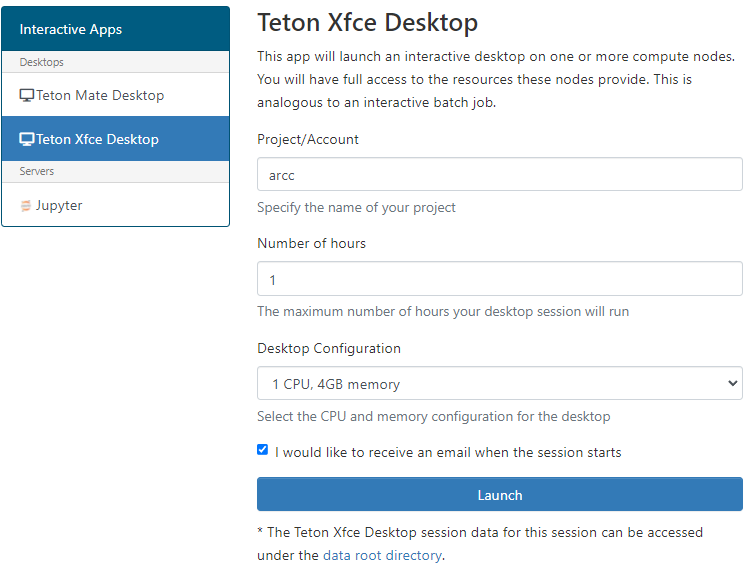

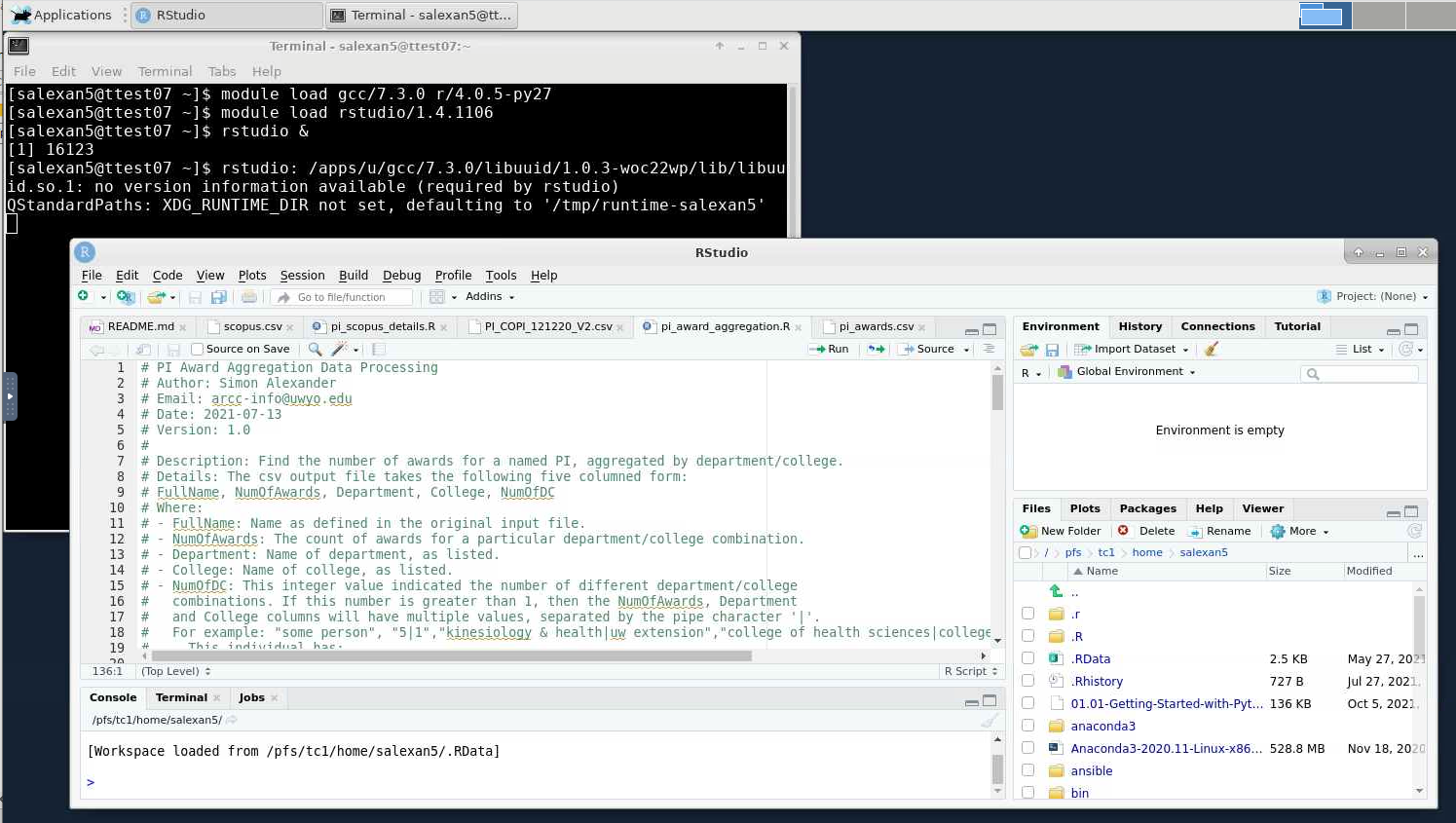

Treat these desktops as simple GUI environments that provide basic file management and access to some GUI applications. Use them for developing, testing and running small jobs. When you need multiple nodes, or significantly more cores and memory, submit a batch job using sbatch instead.
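For comparison, a batch submission for a larger job might look like the minimal sketch below. The account name `myproject`, the file name `multi_node_job.sh`, and the resource values are placeholders chosen for illustration, not site defaults; replace them with your own.

```shell
# Write a hypothetical batch script to the current directory.
# The quoted 'EOF' stops the shell from expanding anything inside the script.
cat > multi_node_job.sh <<'EOF'
#!/bin/bash
# Placeholder project/account name: replace with your own.
#SBATCH --account=myproject
# Walltime request in days-hours:minutes:seconds.
#SBATCH --time=0-02:00:00
# Two nodes with 16 tasks each, and 64G of memory per node (illustrative values).
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=16
#SBATCH --mem=64G

# Run one copy of the command per task; here it just reports each node's name.
srun hostname
EOF

echo "Wrote multi_node_job.sh; submit it with: sbatch multi_node_job.sh"
```

The script is only written to disk here; nothing is submitted until you run `sbatch multi_node_job.sh` yourself on the cluster.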

Steps:

Define the account, walltime, and desktop core/memory allocation.

The maximum walltime allowed is 168 hours (seven days).
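If you want to express the same limit in Slurm's own `days-hours:minutes:seconds` notation (for example in a batch script), 168 hours is `7-00:00:00`. The helper below is a small sketch of that arithmetic; `hours_to_slurm_time` is a hypothetical function name, not a Slurm command.

```shell
# Convert a whole number of hours into Slurm's D-HH:MM:SS time format.
hours_to_slurm_time() {
    local hours=$1
    # Integer division gives whole days; the remainder gives leftover hours.
    printf '%d-%02d:00:00\n' $(( hours / 24 )) $(( hours % 24 ))
}

hours_to_slurm_time 168   # prints 7-00:00:00 (the seven day maximum)
hours_to_slurm_time 4     # prints 0-04:00:00
```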

Please keep our general fairshare policy for cluster use in mind: request only the time you need, and do not leave long sessions unused.

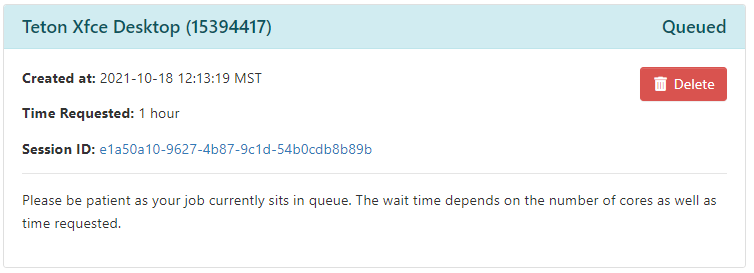

Wait for the job to be allocated. Until then, you will see a message stating that your job is currently sitting in the queue.

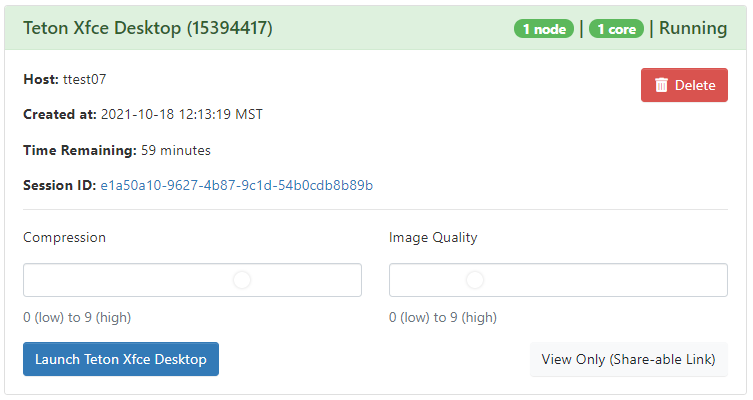

Launch

...

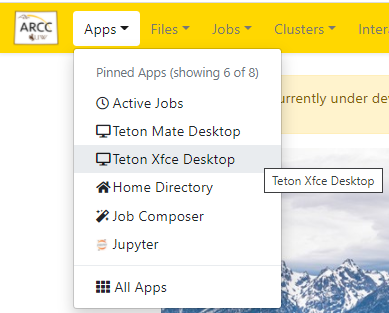

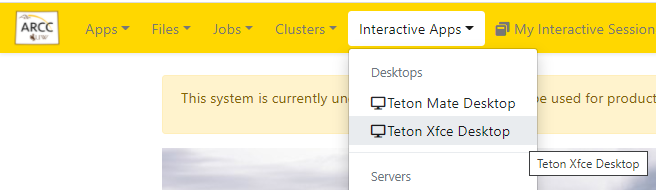

Beartooth Mate/Xfce Desktop. Which one you choose is a matter of personal preference.

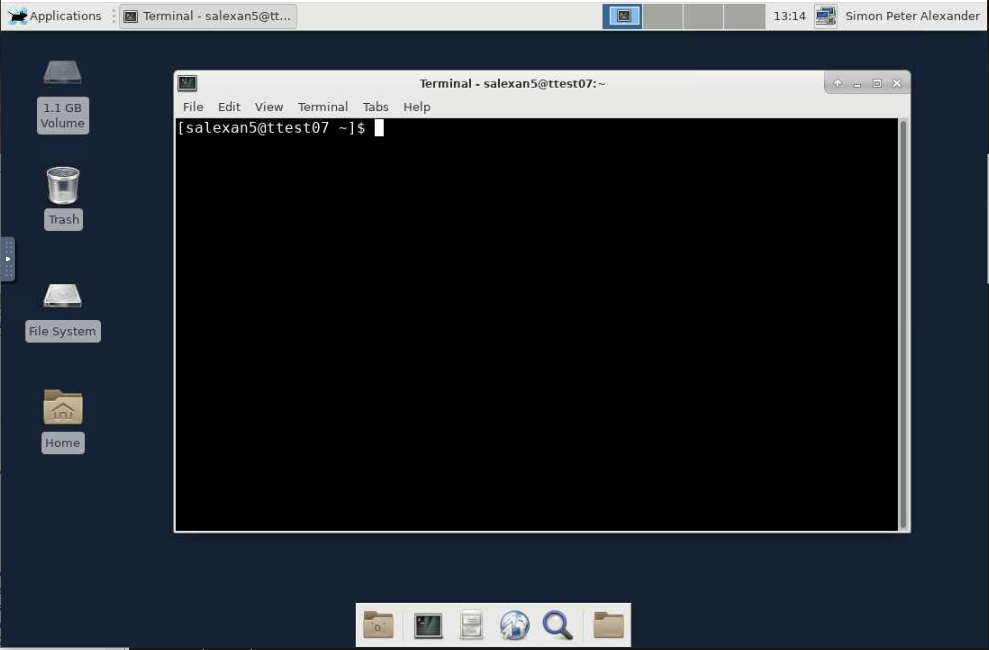

Open a terminal within the desktop - notice you’re on a compute node.
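One way to confirm where the terminal is running is sketched below. It assumes the terminal inherits the standard Slurm environment variables (`SLURM_JOB_ID`, `SLURM_CPUS_ON_NODE`) from the job that started the desktop; `describe_session` is a hypothetical helper name.

```shell
# Print the current host and, if available, details of the enclosing Slurm job.
describe_session() {
    echo "Host: $(hostname)"
    # Slurm sets these variables inside a job; the fallbacks show when they are unset.
    echo "Slurm job ID: ${SLURM_JOB_ID:-none (not inside a Slurm job)}"
    echo "CPUs on this node for the job: ${SLURM_CPUS_ON_NODE:-unknown}"
}

describe_session
```

On a login node the host name will differ and the job ID line should report that no job is set.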

If you close the Desktop App tab, you can navigate to ‘My Interactive Sessions’ and re-launch the desktop. Your session will remain active while you still have ‘Time Remaining’.

Re-launching the desktop restores what you had open before closing the tab. This is different from logging out or shutting down.

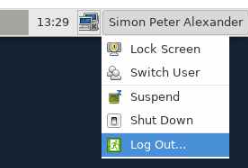

Once finished, Log Out/Shutdown your desktop - both cancel your underlying Slurm job and free up resources.
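If you want to double-check from a terminal that no desktop job is still running, a sketch like the following lists your current jobs. `list_my_jobs` is a hypothetical wrapper; it relies only on the standard Slurm `squeue` command and is meant to be run on the cluster.

```shell
# List the current user's Slurm jobs: job ID, name, state, time used, time limit.
list_my_jobs() {
    # Fall back to `id -un` in case $USER is not set in this shell.
    local me=${USER:-$(id -un)}
    if command -v squeue >/dev/null 2>&1; then
        squeue --user="$me" --format='%i %j %T %M %l'
    else
        echo "squeue not found: run this on a cluster login or compute node" >&2
        return 1
    fi
}
```

Run `list_my_jobs` after logging out; if a desktop job is unexpectedly still listed, it can be cancelled with `scancel` followed by its job ID.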

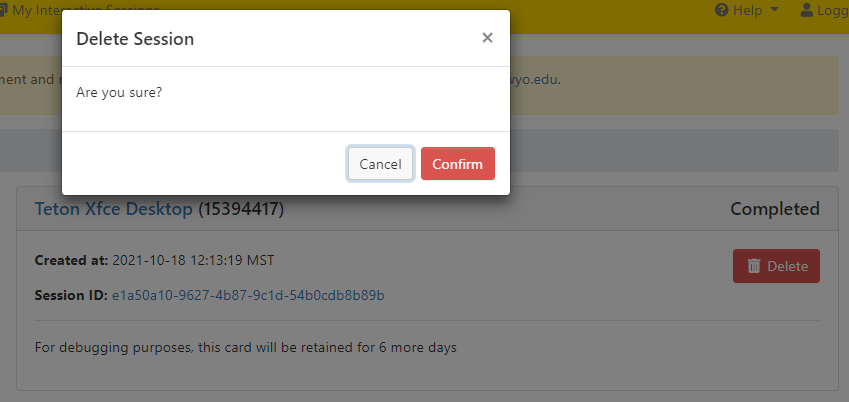

Once finished, navigate to ‘My Interactive Sessions’ to view and clean up the related card.

Clicking on the Session ID opens a tab showing the associated folder within your home folder.

Every session you start has a related folder, named after the assigned session ID, which can be found within the ondemand folder in your home folder:

    [salexan5@tlog1 output]$ pwd
    /home/salexan5/ondemand/data/sys/dashboard/batch_connect/sys/bc_desktop/tetonBeartooth_xfce/output
    [salexan5@tlog2 output]$ ls
    18ba0ee4-1140-498b-84c4-caf8befe05ce  5e5ce3da-e4d1-431a-82f7-acc6c7fe2e5f  926b73b0-ecc5-4939-89cd-58afc8dcc68b  ec5b165d-f38b-4693-a296-13e4fc88398a
    1e13d9aa-b247-425b-af49-4859d1948d4a  71596aa6-96b4-4222-9ff2-c8c57b6f4ce3  92fd1acf-40ef-4ca7-96c2-699af7529877
    2b957278-2451-4497-9bbb-ab03de0ae3dd  7573613a-ba2f-4181-bba1-e70c3a75c82d  e1a50a10-9627-4b87-9c1d-54b0cdb8b89b
    59080c85-595f-4df0-b17b-7372ff500bab  8519c711-8dde-48e6-b160-6ac14bc4a2c5  eaede1f6-ed3f-42fd-8228-a2d375ddc715
    [salexan5@tlog2 output]$ ls 18ba0ee4-1140-498b-84c4-caf8befe05ce/
    before.sh  connection.yml  desktops  job_script_content.sh  job_script_options.json  output.log  script.sh  user_defined_context.json  vnc.log  vnc.passwd
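Because these session folders accumulate over time, it can help to see which ones are old before cleaning up. The sketch below only lists candidates and deletes nothing; `list_old_sessions` is a hypothetical helper, and the 30-day default is an arbitrary choice.

```shell
# List session folders directly under an output directory that have not been
# modified for more than N days (default 30). Nothing is deleted.
list_old_sessions() {
    local output_dir=$1
    local days=${2:-30}
    # -mindepth/-maxdepth 1 restrict the search to the session folders themselves.
    find "$output_dir" -mindepth 1 -maxdepth 1 -type d -mtime +"$days" -print
}
```

For example, `list_old_sessions ~/ondemand/data/sys/dashboard/batch_connect/sys/bc_desktop/tetonBeartooth_xfce/output 60` would print the session folders untouched for more than 60 days, assuming your output folder follows the same layout as the listing above.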

...