...

You must have an account on the Beartooth cluster to access the site (see here to get started with ARCC).

You do not need a VPN connection to work with SouthPass.

You will need two-factor authentication set up (see Two-factor Authentication).


Connecting to SouthPass

SouthPass is available both on and off campus at https://southpass.arcc.uwyo.edu.

When you browse to the site, you will be presented with UW’s WyoLogin page.

If you are given a choice of authentication method, select one now. Otherwise, you will be prompted through your default two-factor authentication method. After authenticating, you should see the SouthPass dashboard.

Interacting with SouthPass

Once you are logged into SouthPass you will be presented with a web interface that looks similar to the following:

...

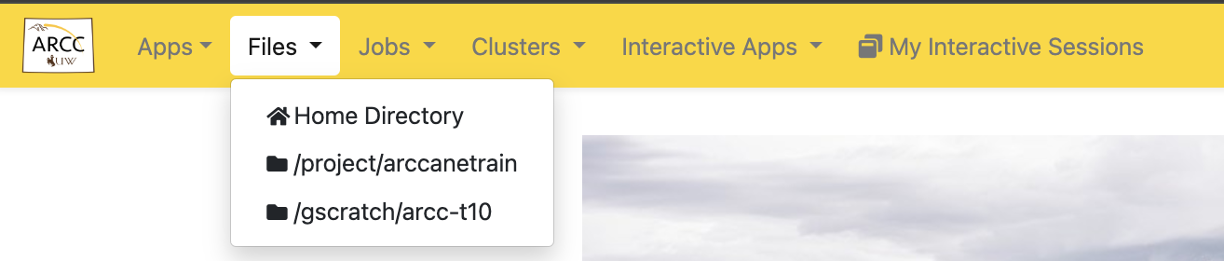

The gold menu bar across the top of the page, which contains tabs for:

Apps - All Applications

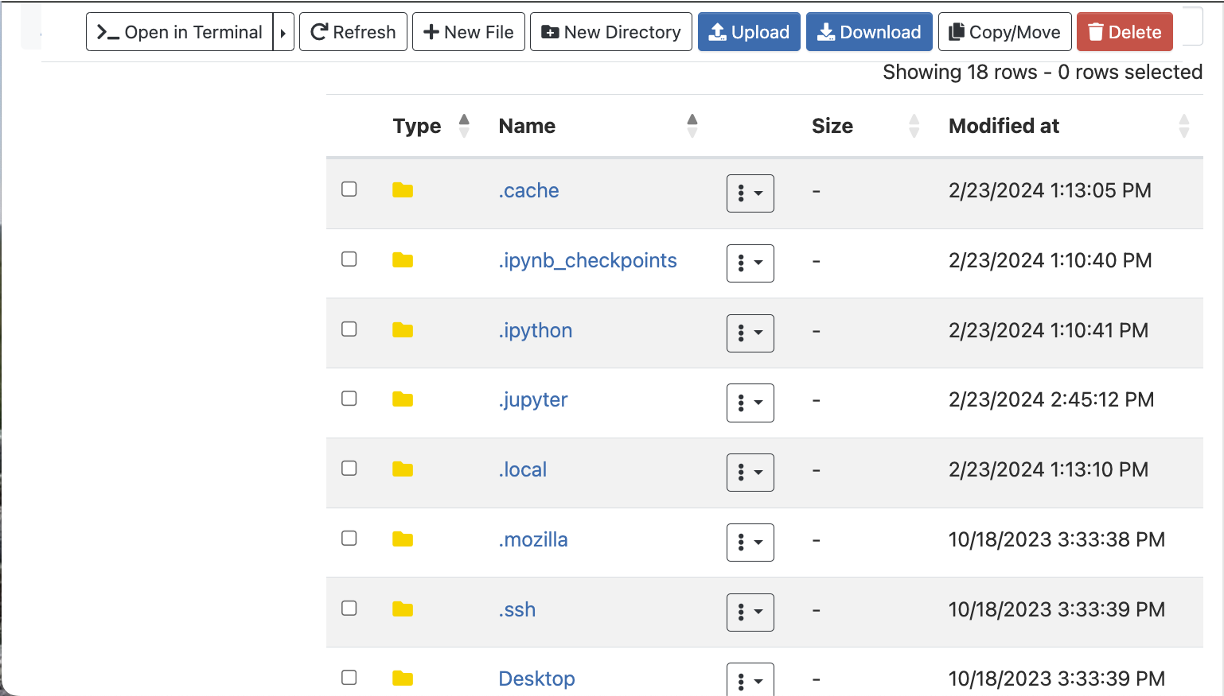

Files - Allows you to work with files from different authorized file systems

Jobs - Job management tools

Clusters - Access to and status of different clusters

Interactive Applications - Allow you to run specific tasks or software

The message area, the light yellow ribbon below the menu bar, displays useful messages from the system. It is only visible when there is a message.

Pinned Apps, the area on the bottom left of the web page, contains quick access tiles to different applications supported on SouthPass.

Message of the day, located in the bottom right corner of the web page, displays any important information about the current day.

...


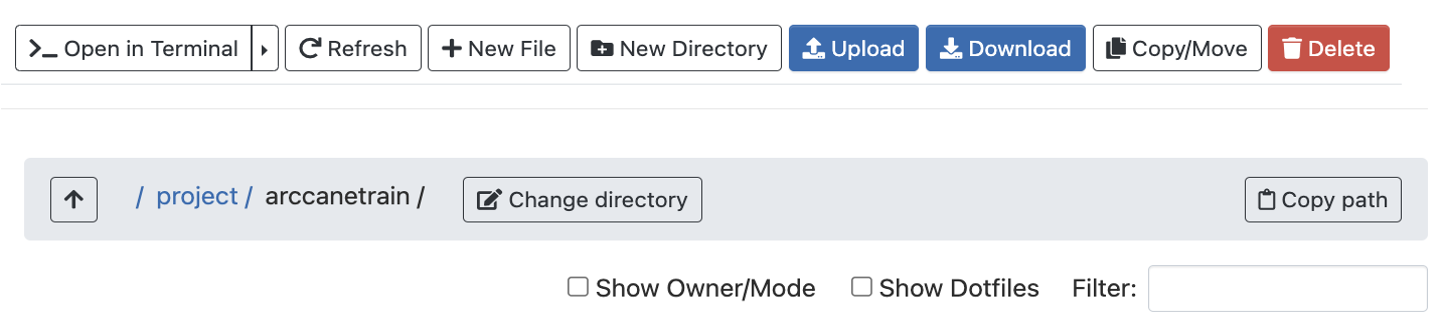

Accessing Your Files

The Files drop-down shows the different storage spaces you have access to. You should see your home directory on Beartooth, but you may also access your project or gscratch space. After you select a storage space, the files in that area are shown, and you can use the file and directory modification options along the top of the window, including:

- “show owner/mode”: show who owns each file or directory (often helpful in shared project folders).
- “show DotFiles”: show hidden files.

Note: The current maximum file size limit is 10GB.
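Because transfers over the limit are presumably rejected, it can help to check for oversized files before moving a directory through the Files page. The sketch below is a hypothetical helper, not part of SouthPass; it assumes “10GB” means 10 GiB (10 × 1024³ bytes), which the page does not specify.

```python
from pathlib import Path
from typing import List

# Assumption: "10GB" is read as 10 GiB (10 * 1024**3 bytes). If the portal
# counts decimal gigabytes, use 10 * 1000**3 instead.
MAX_FILE_BYTES = 10 * 1024**3


def oversized_files(directory: Path, limit: int = MAX_FILE_BYTES) -> List[Path]:
    """Return regular files under `directory` (recursively) larger than `limit` bytes."""
    return sorted(
        p for p in directory.rglob("*")
        if p.is_file() and p.stat().st_size > limit
    )
```

For example, `oversized_files(Path.home() / "data")` lists any files in a hypothetical `data` folder that would likely need another transfer method.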

Jobs Dropdown

From the Jobs drop-down, you can view either your own jobs or all jobs currently running on the Beartooth cluster. From the Job Composer subpage, you can manage all aspects of running jobs on the Beartooth cluster:

...

Custom prompts may not appear in shell windows. To restore a working prompt, remove the PS1 setting from your .bashrc or manually reset the PS1 variable in the shell window.
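As a reversible alternative to deleting the PS1 setting, the lines can be commented out instead. This is a minimal sketch that assumes your prompt is set by lines of the form `PS1=...` or `export PS1=...`; the helper name `comment_out_ps1` is hypothetical, and prompts set in other ways (for example through `PROMPT_COMMAND` or a sourced file) are not handled.

```python
import re

# Matches "PS1=..." or "export PS1=..." with optional leading whitespace.
# Assumption: this is how your custom prompt is set in .bashrc.
PS1_LINE = re.compile(r"^\s*(export\s+)?PS1=")


def comment_out_ps1(bashrc_text: str) -> str:
    """Return the .bashrc text with every PS1 assignment commented out."""
    lines = []
    for line in bashrc_text.splitlines(keepends=True):
        # Lines already starting with "#" do not match, so this is idempotent.
        lines.append("# " + line if PS1_LINE.match(line) else line)
    return "".join(lines)
```

To apply it, keep a backup copy of `~/.bashrc`, pass its contents through `comment_out_ps1`, and write the result back; removing the leading `# ` later restores your prompt.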

On the desktop launch windows, the Compression and Image Quality sliders do not display their current setting, as detailed at https://github.com/OSC/ondemand/issues/1384.

While using the XFCE Interactive Desktop, clicking the Home or File System desktop icons may not work as expected.

Resolve: Remove the .gvfs folder in your home folder.
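The fix above can also be scripted. This is a minimal sketch using only the Python standard library; the function name `remove_gvfs` is hypothetical, and running it while no desktop session is active is an assumption on our part (an in-use `.gvfs` mount may refuse to be removed), not a documented requirement.

```python
import shutil
from pathlib import Path
from typing import Optional


def remove_gvfs(home: Optional[Path] = None) -> bool:
    """Delete <home>/.gvfs if it exists; return True if something was removed."""
    home = home if home is not None else Path.home()
    target = home / ".gvfs"
    # Check for a symlink first: rmtree refuses to operate on symlinks.
    if target.is_symlink() or target.is_file():
        target.unlink()
        return True
    if target.is_dir():
        shutil.rmtree(target)  # remove the directory and everything in it
        return True
    return False
```

Calling `remove_gvfs()` with no argument targets your real home directory; passing a path is mainly useful for testing.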

...

Current User Load

SouthPass runs on a special partition of the cluster, currently with up to two nodes available, for a total of 56 CPUs (2 x 28).

...

Whenever a user starts an interactive GUI desktop, they are actually requesting a Slurm job on this partition. There is therefore a limit on the maximum number of concurrent users, which depends on the configuration users request (for example 1, 2, or 4 CPUs) and on how those requests can be allocated across the nodes.

The number of concurrent users therefore depends on the number of cores each user requests. We have tested the service with 38 concurrent desktops using different combinations of core counts.
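To see how core requests translate into a user limit, here is a rough sketch of the arithmetic. It assumes, purely for illustration, that each desktop is placed whole on one of the two 28-core nodes using a first-fit rule and that nothing else runs on the partition; the real placement is decided by the Slurm scheduler, and `count_fitting_desktops` is a hypothetical name.

```python
from typing import List

NUM_NODES = 2        # from the text: up to two SouthPass nodes
CORES_PER_NODE = 28  # from the text: 2 x 28 = 56 CPUs in total


def count_fitting_desktops(requests: List[int], nodes: int = NUM_NODES,
                           cores_per_node: int = CORES_PER_NODE) -> int:
    """Place each desktop request (in cores) on the first node with room.

    Returns how many requests, taken in order, are placed before the first
    one that does not fit on any node.
    """
    free = [cores_per_node] * nodes
    placed = 0
    for cores in requests:
        for i, avail in enumerate(free):
            if cores <= avail:
                free[i] -= cores
                placed += 1
                break
        else:
            break  # no node has room left for this request
    return placed
```

Under these assumptions, 38 desktops made up of 18 two-core and 20 one-core requests (a hypothetical mix, not the tested configuration) use all 56 cores exactly, while desktops that each request 4 cores top out at 14 (7 per node).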

...